This is the second post in the Decompiling Clojure series. In the first post I showed what Clojure looks like in bytecode.

For this entry, I’ll do a compiler overview. The idea is to understand why and how Clojure ends up looking like that.

For other decompilation scenarios you don’t usually have the advantage of looking at the compiler internals to guide your decompiling algorithms, so we’ll take our chance to peek at the compiler now.

We will visit some compiler source code, so be warned, there’s Java ahead.

It’s Java

Well, yes, the Clojure compiler targeting the JVM is written in Java. There is an ongoing effort to have a Clojure-in-Clojure compiler, but the original compiler is nowhere near being replaced.

The source code is hosted on GitHub, but the development process is a little bit more convoluted, which means you don’t just send pull requests for it. That has been asked for many times and I don’t think it’s about to change, so if you want to contribute, just sign the contributors agreement and follow the rules.

The CinC Alternative

The Clojure-in-Clojure alternative is not only different because it’s written in Clojure, but because it’s built with extensibility and modularization in mind.

In the original Clojure compiler you don’t have a chance to extend, modify or use much of the data produced by the compilation process.

For instance, the Typed Clojure project, which adds gradual typing to Clojure, needed a friendlier interface to the compiler's analyzer phase. That interface was first developed by Ambrose Bonnaire-Sergeant as an interface to the Compiler analyzer and then moved to be part of the CinC analyzer.

The CinC alternative is modularized into at least three different parts:

- The analyzer, meant to be shared among all Clojure compilers (such as ClojureScript)

- The JVM analyzer, contains specific compiler passes for the JVM (for instance locals clearing is done here)

- The bytecode emitter, actually emits JVM bytecode.

There’s a great talk from Timothy Baldridge showing some examples using the CinC analyzer, watch it.

Note: CinC developer Nicola Mometto pointed out that the analyzer written by Ambrose and CinC are indeed different projects. I should’ve noticed that myself, since the analyzer by Ambrose uses the analyzer from the original Clojure compiler, which is exposed as a function. Part of my mistake surely derived from the fact that one is called tools.analyzer.jvm and the other one is called jvm.tools.analyzer.

Compilation process

One of the supposed advantages of Lisp-like languages is that the concrete syntax is already the abstract syntax. If you’ve read some of fogus’s writings about Clojure compilation, though, he has some opinions on that statement:

This is junk. Actual ASTs are adorned with a boatload of additional information like local binding information, accessible bindings, arity information, and many other useful tidbits.

And he’s right, but there’s one more thing: Clojure and Lisp syntax are just serialization formats that map to the underlying data structure of the program.

That’s why Lisp-like languages are easier to parse and unparse, and easier to build tools for: the program data structure is accessible to the user and not only to the compiler.

That’s also the reason why macros in Lisp or Clojure are so different from macros in Scala, where the pre-processor hands you an AST that has nothing to do with the Scala language itself.

That’s the proper definition of homoiconicity, by the way: the syntax is isomorphic with the AST.

Compiler phases

In general, compilers can be broken up into three pieces:

- Lexer/Parser

- Analyzer

- Emitter

Clojure kind of follows this pattern, so if we’re compiling a Clojure program the very high level approach to the compilation pipeline would be:

- Read file

- Read s-expression

- Expand macros if present

- Analyze

- Generate JVM bytecode

The first three steps are the Reading phase from the fogus article.

There is one important thing about these steps:

Bytecode has no information about macros whatsoever; the emitted bytecode corresponds to what you see with macroexpand calls. Since macros are expanded before analyzing, you shouldn’t expect to find anything about your macro in the compiled bytecode, nada, niet, gone.

Meaning, we shouldn’t expect to be able to properly decompile macro’ed stuff either.
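To make the point concrete, here is a minimal sketch in Java of a toy macro expander. It is an illustration under loud assumptions, not the compiler's code: forms are modeled as nested java.util.List values, symbols as plain Strings, and the class and method names (ToyMacroexpand, expand, print) are made up for this post. The only macro it knows is when, rewritten into the (if test (do body...)) shape that clojure.core's when expands to.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model only: forms are nested Lists, symbols are plain Strings.
// This is not how clojure.lang.Compiler represents or expands forms.
class ToyMacroexpand {

    // Rewrites (when test body...) into (if test (do body...)), recursively.
    static Object expand(Object form) {
        if (!(form instanceof List)) {
            return form; // symbols and literals expand to themselves
        }
        List<?> list = (List<?>) form;
        if (list.size() >= 2 && "when".equals(list.get(0))) {
            List<Object> doForm = new ArrayList<>();
            doForm.add("do");
            doForm.addAll(list.subList(2, list.size()));
            List<Object> ifForm = new ArrayList<>();
            ifForm.add("if");
            ifForm.add(list.get(1));
            ifForm.add(doForm);
            return expand(ifForm); // the result may itself contain macro calls
        }
        List<Object> out = new ArrayList<>();
        for (Object item : list) {
            out.add(expand(item));
        }
        return out;
    }

    // Prints a form back as an s-expression.
    static String print(Object form) {
        if (!(form instanceof List)) {
            return String.valueOf(form);
        }
        List<?> list = (List<?>) form;
        StringBuilder sb = new StringBuilder("(");
        for (int i = 0; i < list.size(); i++) {
            if (i > 0) {
                sb.append(' ');
            }
            sb.append(print(list.get(i)));
        }
        return sb.append(')').toString();
    }

    public static void main(String[] args) {
        Object form = Arrays.asList("when", "ready?", Arrays.asList("println", "x"));
        System.out.println(print(form));
        System.out.println(print(expand(form)));
    }
}
```

Whatever runs after expand only ever sees if and do. The when symbol is gone before analysis starts, which is why a decompiler working from bytecode has nothing to recover it from.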

Compile vs. Eval

As said in the first post, the class file doesn’t need to be on disk, and that’s better understood if we think about eval.

When you type a command in the REPL it needs to be properly translated to bytecode before the JVM is able to execute it, but it doesn’t mean the compiler will save a class file, then load it, and only then execute it.

It will be done on the fly.

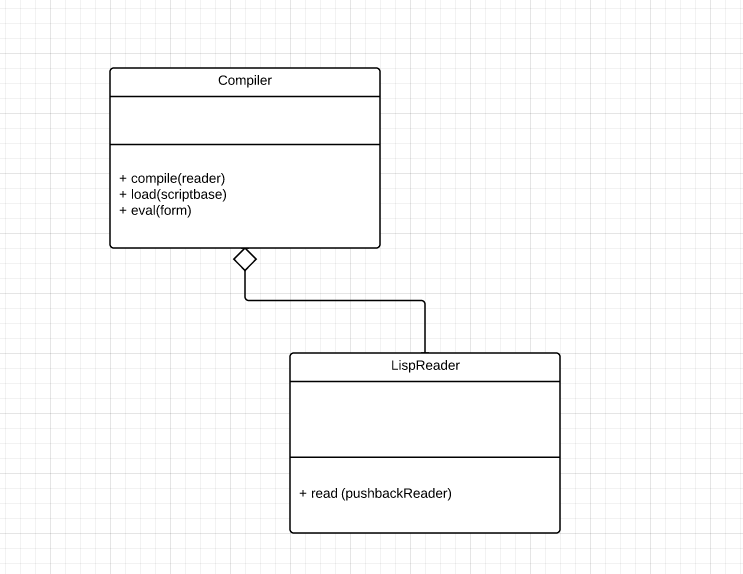

We will consider three entry points for the compiler: compile, load and eval.

The LispReader is responsible for reading forms from an input stream.

Compile Entry Point

compile is a static method of the Compiler class, found in the Compiler.java file, and it does generate a class file on disk for each function in the compiled namespace.

For instance, it will get called when you invoke the compile function on a namespace in your REPL.

The Clojure function just wraps the Java function that does the actual work.

Besides all the preamble, the core of the function is just a loop which reads and calls the compile1 function for each form found in the file.


As we expect, the compile1 function does macro expansion before analyzing or emitting anything. If the expanded form turns out to be a list headed by do, it recursively calls itself on each subform, which is the then branch of the function's if test.

On the else branch, the analyze function does the proper s-expr analysis, and the resulting expression is emitted and then eval’ed. More on analyzing ahead.

Load Entry Point

The load function gets called any time we do a require for a namespace that is not pre-compiled.

For instance, say we do a require for the clojure.core.reducers namespace.

The clj file will be read as a stream in the loadResourceScript function and passed as the first rdr parameter of the load function.

The load function has a read-form-and-eval loop pretty similar to the one we saw in the compile function, but instead of calling compile1 it calls eval, which is our next entry point.
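The shared shape of both loops can be sketched in Java as follows. This is a toy model under stated assumptions: the "reader" is a Supplier that hands out pre-built forms, the EOF marker is a hypothetical sentinel object, and ToyFormLoop, run and readerOver are names invented for this example rather than anything found in Compiler.java.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.function.Function;
import java.util.function.Supplier;

// Toy model only: names and shapes here are hypothetical, not the Compiler's.
class ToyFormLoop {

    // Unique sentinel, so that any readable value (even null) can be a form.
    static final Object EOF = new Object();

    // Reads forms until EOF, hands each one to perForm, returns the last result.
    static Object run(Supplier<Object> readForm, Function<Object, Object> perForm) {
        Object last = null;
        for (Object form = readForm.get(); form != EOF; form = readForm.get()) {
            last = perForm.apply(form);
        }
        return last;
    }

    // Adapts an in-memory sequence of forms into a "reader".
    static Supplier<Object> readerOver(Object... forms) {
        Iterator<Object> it = Arrays.asList(forms).iterator();
        return () -> it.hasNext() ? it.next() : EOF;
    }

    public static void main(String[] args) {
        // load style: each form is evaluated as soon as it is read
        System.out.println(run(readerOver("(def a 1)", "(inc a)"), form -> "evaluated " + form));
        // compile style: same loop, a different action per form
        System.out.println(run(readerOver("(def a 1)", "(inc a)"), form -> "compiled " + form));
    }
}
```

The loop itself stays the same in both cases. Only the per-form action changes, which is the difference between the two entry points described above.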

Eval Entry Point

eval is the E in REPL; anything to be dynamically evaluated goes through the eval function.

For instance, if you type (+ 1 1) at your REPL, that expression will be parsed, analyzed and evaluated starting at the eval function.

eval receives a form as its parameter, since it knows nothing about files or namespaces.

eval is just straightforward analyzing of the form, and there’s no emit here.

The reader

Languages with more complicated syntaxes separate the Lexer and Parser into two different pieces. Like most Lisps, Clojure combines these two into just a Reader.

The reader is pretty much self-contained in LispReader.java, and its main responsibility is, given a stream, to return the properly tokenized s-expressions.

The reader dispatches reading to specialized functions and classes when a particular token is found. For instance, ( dispatches to the ListReader class, digits dispatch to the readNumber function, and so on.

Many of the collection-reading classes (VectorReader, MapReader, ListReader, etc.) rely on the more generic readDelimitedList function, which receives the particular closing delimiter as a parameter.
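The dispatch-then-delegate idea can be sketched with a toy reader in Java. This is not LispReader: it only understands lists, vectors and bare atoms, it returns both lists and vectors as java.util.List, and every name in it (ToyReader, readAtom, skipWhitespace, readString) is an assumption made for the example. Only the name readDelimitedList is borrowed from the text, and its signature here is simplified.

```java
import java.io.IOException;
import java.io.PushbackReader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

// Toy reader: knows lists, vectors and whitespace-separated atoms, nothing else.
class ToyReader {

    // Dispatches on the first significant character of the next form.
    static Object read(PushbackReader r) throws IOException {
        int ch = skipWhitespace(r);
        if (ch == -1) throw new IOException("EOF while reading");
        if (ch == '(') return readDelimitedList(')', r);
        if (ch == '[') return readDelimitedList(']', r);
        if (ch == ')' || ch == ']') throw new IOException("Unmatched delimiter: " + (char) ch);
        return readAtom(ch, r);
    }

    // Shared by lists and vectors: reads forms until the closing delimiter shows up.
    static List<Object> readDelimitedList(char delim, PushbackReader r) throws IOException {
        List<Object> items = new ArrayList<>();
        while (true) {
            int ch = skipWhitespace(r);
            if (ch == -1) throw new IOException("EOF while reading, expected " + delim);
            if (ch == delim) return items;
            r.unread(ch); // not the delimiter: let read() dispatch on it
            items.add(read(r));
        }
    }

    static String readAtom(int first, PushbackReader r) throws IOException {
        StringBuilder sb = new StringBuilder().append((char) first);
        int ch;
        while ((ch = r.read()) != -1) {
            if (Character.isWhitespace(ch) || ",()[]".indexOf(ch) >= 0) {
                r.unread(ch); // the terminator belongs to the enclosing form
                break;
            }
            sb.append((char) ch);
        }
        return sb.toString();
    }

    // Commas count as whitespace, as they do in Clojure.
    static int skipWhitespace(PushbackReader r) throws IOException {
        int ch = r.read();
        while (ch != -1 && (Character.isWhitespace(ch) || ch == ',')) {
            ch = r.read();
        }
        return ch;
    }

    static Object readString(String source) {
        try {
            return read(new PushbackReader(new StringReader(source)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(readString("(defn f [x] (+ x 1))"));
    }
}
```

The only thing that differs between reading a list and reading a vector in this sketch is the character passed as delim, which is the reuse the paragraph above describes.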

This is important because the reader is responsible for reading line and column number information, and establishing a relationship between tokens read and locations in the file.

One of the main drawbacks of the reader used by the compiler is that much of the line and column number information is lost. That’s one of the reasons we saw in our earlier post that, for a 7-line function, only one line was properly mapped: interestingly, the line corresponding to the outer s-expression.

We will have to modify this reader if we want proper debugging information for our debugger.
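As a rough idea of what keeping that information would mean, here is a small Java sketch of a tokenizer that records the line and column where every token starts. It is an assumption-laden toy, unrelated to LispReader's actual bookkeeping: it only splits on whitespace and parentheses, and the names ToyPositions, Token and tokenize are invented for this example.

```java
import java.util.ArrayList;
import java.util.List;

// Toy illustration: every token keeps the position where it started.
class ToyPositions {

    static final class Token {
        final String text;
        final int line;   // 1-based
        final int column; // 1-based

        Token(String text, int line, int column) {
            this.text = text;
            this.line = line;
            this.column = column;
        }

        @Override
        public String toString() {
            return text + "@" + line + ":" + column;
        }
    }

    static boolean isParen(char c) {
        return c == '(' || c == ')';
    }

    static List<Token> tokenize(String source) {
        List<Token> tokens = new ArrayList<>();
        int line = 1;
        int column = 1;
        int i = 0;
        while (i < source.length()) {
            char c = source.charAt(i);
            if (c == '\n') {
                line++;
                column = 1;
                i++;
            } else if (Character.isWhitespace(c)) {
                column++;
                i++;
            } else if (isParen(c)) {
                tokens.add(new Token(String.valueOf(c), line, column));
                column++;
                i++;
            } else {
                int start = i;
                while (i < source.length()
                        && !Character.isWhitespace(source.charAt(i))
                        && !isParen(source.charAt(i))) {
                    i++;
                }
                tokens.add(new Token(source.substring(start, i), line, column));
                column += i - start;
            }
        }
        return tokens;
    }

    public static void main(String[] args) {
        for (Token t : tokenize("(if a\n  (f b))")) {
            System.out.println(t);
        }
    }
}
```

With positions attached to every token, an inner s-expression such as (f b) can be mapped to its own line and column instead of inheriting the position of the outer form.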

The analyzer

The analyzer is the part of the compiler that translates your s-expressions into proper things to be emitted.

We’re already familiar with the REPL. In the eval function, analyze and emit are combined in a single step, but internally there’s a two-step process.

First, our parsed but meaningless code needs to be translated into meaningful expressions.

In the case of the Clojure compiler, all expressions implement the Expr interface.

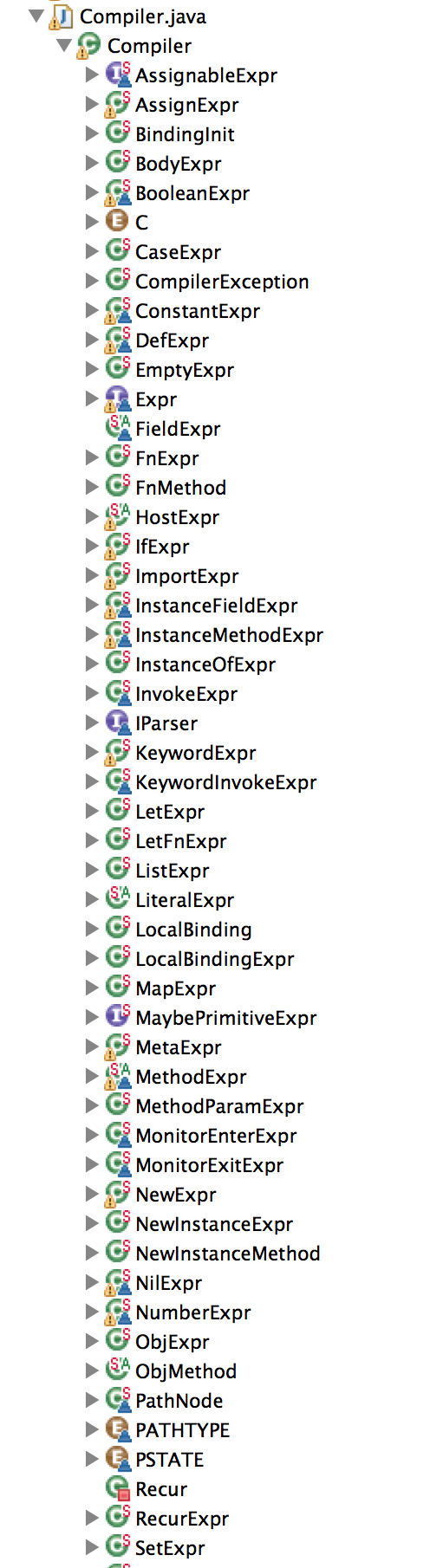

Much of the Clojure special forms are handled here, IfExpr, LetExpr, LetFnExpr, RecurExpr, FnExpr, DefExpr, CaseExpr, you get the idea.

Those are nested classes inside the Compiler class, and for you visualize how many of those special cases exist inside the compiler, I took this picture for you:

As you would expect for a properly modularized piece of software, each expression knows how to parse itself, eval itself, and emit itself.
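A toy version of that design in Java could look like the sketch below. It is a model, not the compiler's Expr: the interface and class names (ToyExpr, ToyConstExpr, ToyIfExpr) are hypothetical, emit appends readable pseudo-instructions to a list instead of driving ASM, and the labels are not made unique, so nested ifs would need more work.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model: each expression can evaluate itself and emit itself.
interface ToyExpr {
    Object eval();

    void emit(List<String> out);
}

final class ToyConstExpr implements ToyExpr {
    private final Object value;

    ToyConstExpr(Object value) {
        this.value = value;
    }

    public Object eval() {
        return value;
    }

    public void emit(List<String> out) {
        out.add("push " + value);
    }
}

final class ToyIfExpr implements ToyExpr {
    private final ToyExpr test;
    private final ToyExpr then;
    private final ToyExpr otherwise;

    ToyIfExpr(ToyExpr test, ToyExpr then, ToyExpr otherwise) {
        this.test = test;
        this.then = then;
        this.otherwise = otherwise;
    }

    public Object eval() {
        Object t = test.eval();
        // Clojure truthiness: only nil and false are falsey
        boolean truthy = t != null && !Boolean.FALSE.equals(t);
        return truthy ? then.eval() : otherwise.eval();
    }

    public void emit(List<String> out) {
        test.emit(out);
        out.add("jump-if-falsey ELSE");
        then.emit(out);
        out.add("jump END");
        out.add("ELSE:");
        otherwise.emit(out);
        out.add("END:");
    }
}

class ToyExprDemo {
    public static void main(String[] args) {
        ToyExpr expr = new ToyIfExpr(new ToyConstExpr(true), new ToyConstExpr(1), new ToyConstExpr(2));
        System.out.println(expr.eval());
        List<String> out = new ArrayList<>();
        expr.emit(out);
        out.forEach(System.out::println);
    }
}
```

The same tree answers both questions: eval walks it to produce a value right away, while emit walks it to produce instructions for later.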

The analyze function is essentially a switch on the type of the form to be analyzed.

And there’s special handling for the special forms, which are keyed by Symbol in the same file.
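Both levels of dispatch, first on the type of the form and then on the head symbol for special forms, can be sketched in Java like this. It is a toy under stated assumptions: forms are nested java.util.List values with Strings as symbols, the result is a descriptive string rather than a real Expr, and apart from the IfExpr and DefExpr names mentioned above, every name (ToyAnalyzer, SPECIALS, Constant, Symbol, Invoke) is invented for the example.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Toy analyzer: turns a nested-List form into a tagged description.
class ToyAnalyzer {

    // Special forms are looked up by their head symbol, one parser each.
    static final Map<String, Function<List<?>, String>> SPECIALS = new HashMap<>();

    static {
        SPECIALS.put("if", form -> "IfExpr" + analyzeAll(form.subList(1, form.size())));
        SPECIALS.put("def", form -> "DefExpr" + analyzeAll(form.subList(1, form.size())));
    }

    // Dispatches on the type of the form, then on the head symbol for lists.
    static String analyze(Object form) {
        if (form == null) return "Nil";
        if (form instanceof Boolean || form instanceof Number) return "Constant(" + form + ")";
        if (form instanceof String) return "Symbol(" + form + ")";
        if (form instanceof List) {
            List<?> list = (List<?>) form;
            if (list.isEmpty()) return "EmptyList";
            Function<List<?>, String> parser = SPECIALS.get(list.get(0));
            if (parser != null) return parser.apply(list); // special form
            return "Invoke" + analyzeAll(list);            // plain function call
        }
        throw new IllegalArgumentException("Cannot analyze: " + form);
    }

    static String analyzeAll(List<?> forms) {
        StringBuilder sb = new StringBuilder("(");
        for (int i = 0; i < forms.size(); i++) {
            if (i > 0) {
                sb.append(", ");
            }
            sb.append(analyze(forms.get(i)));
        }
        return sb.append(")").toString();
    }

    public static void main(String[] args) {
        Object form = Arrays.asList("if", "ready?", 1, Arrays.asList("f", 2));
        System.out.println(analyze(form));
    }
}
```

Adding a special form to this sketch means adding one entry to the table, while everything that is not in the table falls through to the generic invoke case.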

Analyze will return a parsed Expr, which is now a part of your program represented in the internal data structures of the compiler.

The bytecode generator

As said before, it uses ASM, so we find the standard code stacking up visitors, annotations, methods, fields, etc.

I won’t go into specific details about the ASM API here, since it’s properly documented elsewhere.

Just notice that no matter whether code is eval’ed or not, JVM bytecode will be generated.

What’s next

One of the reasons I ended up here when I started working on the debugger was to see if by any means, I could add better line number references to the current Clojure compiler.

As said before and as we saw here, the Java Clojure Compiler is not exactly built for extensibility.

The option I had left was to modify the line numbers and other debugging information at runtime, and that’s what I will show you in the next post.

I will properly synchronize Clojure source code with JVM bytecode, meaning I will synchronize code trees. That way I will not only add proper line references, but I will also know which bytecode corresponds to which s-expression in your source.

Doing Clojure, I usually end up with a whole nest of s-expressions packed into a single line of code.

What use do I have for a line-based debugger with that code?

I want an s-expression based debugger, don’t you?

One more reason we have to envy DrRacket, whose debugger already knows about them.

Stay tuned to see it working on the JVM.

Meanwhile, I’m guilespi on Twitter.