This post is about Clojure reducers, but what makes them great are the ideas behind the implementation, which may be portable to other languages.

So if you’re interested in performance don’t leave just yet.

One of the primary motivators for the reducers library is Guy Steele’s ICFP ‘09 talk, and since I assume you don’t have one hour to spend verifying I’m telling you it’s worth watching, I’ll do my best to summarize it here, which is a post you will probably scan in less than 15 seconds.

One of the main points of the talk is that the way we’ve been thinking about programming for the last 50 years isn’t serving us anymore.

Why?

Because good sequential code is different from good parallel code.

Parallel vs. Sequential

1 2 3 4 5 6 7 | |

The accumulator loop

How would you sum all the elements of an array?

1 2 3 4 | |

That’s right, the accumulator loop, you initialize the accumulator and update the thingy in each iteration step.

But you’re complecting how you update your sum with how you iterate your collection, ain’t it?

There’s a difference between what you do with how you do it. If you say SUM(X) it doesn’t make promises on the strategy,

it’s when you actually implement that SUM that the sequential promise is made.

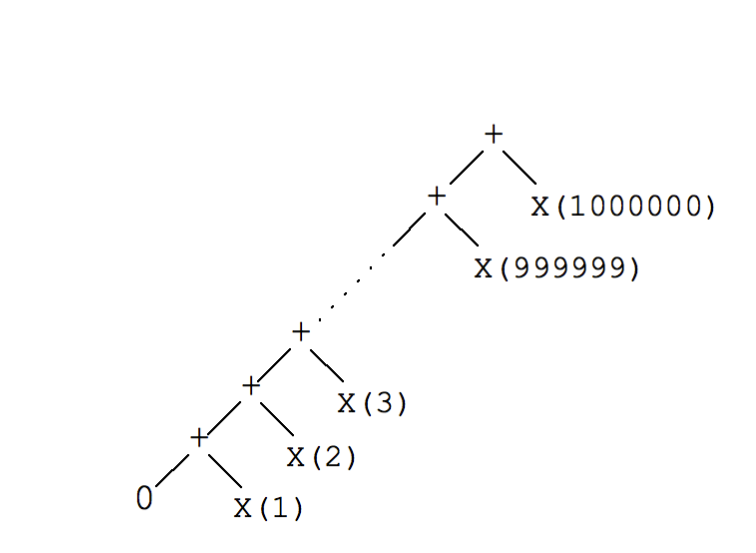

The problem is the computation tree for the sequential strategy, if we remove the looping machinery and leave only the sums, there’s a one million steps delay to get to the final result.

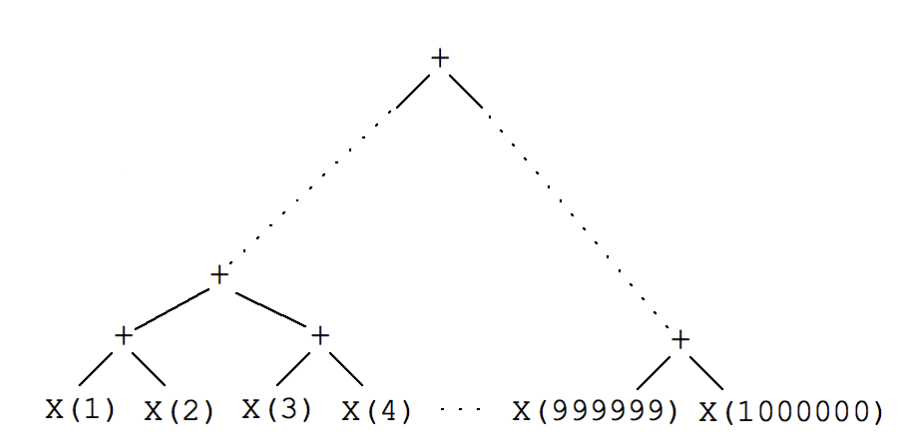

So what’s the tree you would like to see?

And what code you would need to write in order to get that tree? Functional code?

Think again.

Functional code is not the complete answer, since you can write functional code and still have the same problem.

Since linear linked lists are inherently sequential you may be using a reducer and be on the same spot.

1

| |

We need multiway decomposition.

Divide and conquer

Rationale behind multiway decomposition is that we need a list representation that allows for binary decomposition of the list.

You can obviously have many redundant trees representing the same conceptual list, and there’s value in redundancy since different trees have different properties.

Summary

- Don’t split a problem between first and rest, split in equal pieces.

- Don’t create a null solution an successively update it, map inputs to singleton solutions and merge tree-wise.

- Combining solutions is trickier than incremental updates.

- Use sequential techniques near the leaves.

- Programs organized for parallelism can be processed in parallel or sequentially.

- Get rid of cons.

Clojure Reducers

So what are Clojure reducers?

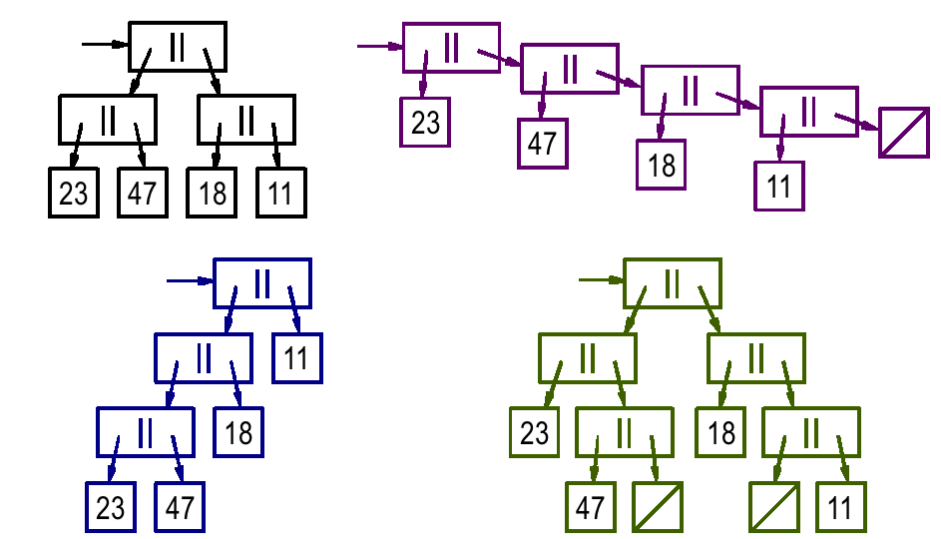

In short, it’s a library providing a new function fold, which is a parallel reduce+combine

that shares the same shape with the old sequence based code, main difference been you get to

provide a combiner function.

Go and read this and this great posts by Rich Hickey.

Back? Ok…

As Rich says in his article the accumulator style is not absent but the single initial value

and the serial execution promises of foldl/r have been abandoned.

For what it’s worth, I’ve written in Clojure the Split a string into words parallel

algorithm suggested by Steele here, performance sucks compared against

clojure.string/split but it’s a nice algorithm none the less.

1 2 3 4 5 6 7 8 | |

There are a couple interesting things in the code.

combine-statesis the new combiner function, decides how to combine different splits100is the size when to stop splitting and do a sequential processing (callsreduceafterwards). Defaults to512.- The

fnis the standard reducing function - The list is transformed into a

vectorbefore processing.

Last step is just for the sake of experimentation, and has all to do with the underlying structure for vectors.

Both vectors and maps in Clojure are implemented as trees, which as we saw above, is one of the requirements for multiway decomposition.

There’s a great article here about Clojure vectors, but key interest point is that it provides

practically O(1) runtime for subvec, which is how the vector folder foldvec successively splits the input vector before reaching the

sequential processing size.

So if you look at the source code only for vectors and maps actual fork/join parallelism happens, and standard reduce is called for linear lists.

1 2 3 4 5 6 7 8 9 10 | |

What I like the most about reducers is that reducer functions are curried, so you can compose them together as in:

1 2 3 | |

It’s like the utmost example of the simple made easy Hickey’s talk, where decomplecting the system, results in a much simpler but powerful design at the same time.

I’m guilespi at Twitter